Hadoop is an open-source framework for writing and running distributed applications that process large amounts of data.

The Hadoop platform was designed for problems where the data is too large to store or process efficiently on a single machine.

Hadoop is designed to run on a large number of machines that don’t share any memory or disks. That means you can buy a whole bunch of commodity servers, slap them in a rack, and run the Hadoop software on each one.

MapReduce

MapReduce is a programming model for processing large data sets with a parallel, distributed algorithm on a cluster.

A MapReduce program comprises a Map() procedure that performs filtering and sorting (such as sorting students by first name into queues, one queue for each name) and a Reduce() procedure that performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The “MapReduce System” (also called the “infrastructure” or “framework”) orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing redundancy, fault tolerance, and overall management of the whole process.
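To make the two roles concrete, here is a minimal Python sketch of the student example, run sequentially on a single machine. The input names and the functions `map_fn`, `reduce_fn` and `run_mapreduce` are hypothetical illustrations, not Hadoop's API. In this sketch, the sorting into per-name queues happens in a separate shuffle/sort step between the two phases. That step is the part a real framework takes care of for you.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical input: one full name per student.
students = ["Alice Smith", "Bob Jones", "Alice Brown", "Carol White", "Bob Green"]


def map_fn(full_name):
    """Map: emit a (first_name, 1) pair for one student."""
    yield full_name.split()[0], 1


def reduce_fn(first_name, counts):
    """Reduce: summarize one queue by counting the students in it."""
    return first_name, sum(counts)


def run_mapreduce(records, mapper, reducer):
    """Run the three phases sequentially on one machine."""
    # Map phase: apply the mapper to every input record.
    pairs = [pair for record in records for pair in mapper(record)]
    # Shuffle/sort phase: sort by key so that equal keys are adjacent,
    # forming one "queue" per first name.
    pairs.sort(key=itemgetter(0))
    # Reduce phase: call the reducer once per key with that key's values.
    return [
        reducer(key, (value for _, value in group))
        for key, group in groupby(pairs, key=itemgetter(0))
    ]


print(run_mapreduce(students, map_fn, reduce_fn))
# [('Alice', 2), ('Bob', 2), ('Carol', 1)]
```

The mapper only ever sees one record, and the reducer only sees one key. This independence is what lets a framework run many copies of each on different machines.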

The model is inspired by the map and reduce functions commonly used in functional programming, although their purpose in the MapReduce framework is not the same as in their original forms. Furthermore, the key contribution of the MapReduce framework is not the map and reduce functions themselves. It is the scalability and fault tolerance that a variety of applications gain because the execution engine is optimized once and then shared by all of them.
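For comparison, these are the functional-programming originals as they appear in Python's built-in `map` and `functools.reduce`. The example shows how they differ from MapReduce. The functional reduce folds a whole list into a single value, whereas a MapReduce reducer is called once per key with only that key's values.

```python
from functools import reduce

words = ["map", "reduce", "shuffle"]

# Functional map: apply a function to each element independently.
lengths = list(map(len, words))

# Functional reduce (a fold): combine all elements into a single value.
total = reduce(lambda acc, n: acc + n, lengths, 0)

print(lengths, total)  # [3, 6, 7] 16
```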

A MapReduce job usually splits the input data set into independent chunks, which are processed by the map tasks in a completely parallel manner. The framework sorts the outputs of the maps, which are then input to the reduce tasks. Typically, both the input and the output of the job are stored in a file system. The framework takes care of scheduling tasks, monitoring them, and re-executing failed tasks.
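The job flow described above can be sketched in Python as a word count. The sketch assumes that threads on one machine stand in for the cluster's nodes and that an in-memory list stands in for the input files. The function names, the chunk size of 2 and the limit of 3 attempts per task are arbitrary choices for this sketch, not Hadoop settings.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def split_input(lines, chunk_size):
    """Split the input into independent chunks, one per map task."""
    return [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]


def map_task(chunk):
    """Map task: emit (word, 1) for every word in its chunk."""
    return [(word, 1) for line in chunk for word in line.split()]


def run_with_retries(task, arg, max_attempts=3):
    """Re-execute a failed task until it succeeds or runs out of attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task(arg)
        except Exception:
            if attempt == max_attempts:
                raise  # give up: in this sketch the whole job then fails


def word_count_job(lines, mapper=map_task, chunk_size=2, workers=4):
    chunks = split_input(lines, chunk_size)
    # Map phase: one task per chunk, run in parallel, each with retries.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        map_outputs = list(pool.map(lambda c: run_with_retries(mapper, c), chunks))
    # Sort phase: sort all map output so equal keys are adjacent, then group.
    grouped = defaultdict(list)
    for word, count in sorted(p for output in map_outputs for p in output):
        grouped[word].append(count)
    # Reduce phase: one reduce call per word, summing its counts.
    return {word: sum(counts) for word, counts in grouped.items()}


print(word_count_job(["the quick fox", "the lazy dog", "the end"]))
# {'dog': 1, 'end': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 3}
```

Because each map task depends only on its own chunk, a failed task can be re-run in isolation without redoing the rest of the job.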

How to set up Hadoop

Linux is the official development and production platform for Hadoop, although Windows is a supported development platform as well. On a Windows machine, you’ll need to install Cygwin so that Hadoop’s shell and Unix scripts can run.

1. Go to “http://www.cygwin.com/”.

2. Click the “Install Cygwin” link.

3. Download the setup .exe that matches your system (32-bit or 64-bit).

4. Double-click the downloaded file to start the installer.

5. Click “Next”.

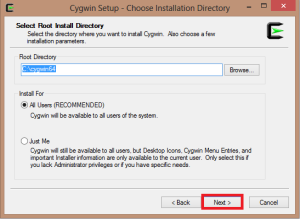

6. Select the root directory.

7. Select the Local Package Directory.

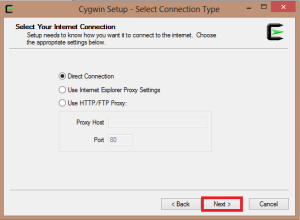

8. Select your Internet connection type.

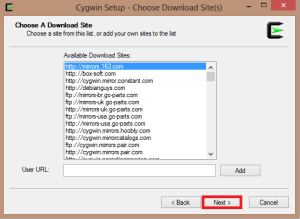

9. Select a download site (mirror).

10. Click “Next”.

11. Click “Next”.

12. Click the “Finish” button.

Running Hadoop requires Java (version 1.6 or higher). Mac users should get it from Apple. You can download the latest JDK for other operating systems from Sun at http://java.sun.com/javase/downloads/index.jsp. Install it and remember the root of the Java installation, which we’ll need later.
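Before installing, you can confirm which Java version is on your PATH by running java -version. The Python sketch below automates that check against the 1.6 minimum stated above. The helper names are hypothetical, and the parsing assumes the usual `version "..."` banner. Since Java 9 the leading "1." was dropped from version numbers, so the sketch maps a version such as "17" onto "1.17" purely for comparison.

```python
import re
import shutil
import subprocess

MIN_VERSION = (1, 6)  # minimum stated in the text


def parse_java_version(banner):
    """Return the version from `java -version` output as a (1, N) tuple, or None.

    Old scheme: "1.8.0_301" -> (1, 8). New scheme: "17.0.2" -> (1, 17).
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', banner)
    if match is None:
        return None
    major, minor = int(match.group(1)), int(match.group(2) or 0)
    return (major, minor) if major == 1 else (1, major)


def java_is_new_enough(banner):
    version = parse_java_version(banner)
    return version is not None and version >= MIN_VERSION


if __name__ == "__main__":
    java = shutil.which("java")
    if java is None:
        print("java not found on PATH")
    else:
        # `java -version` prints its banner to stderr, not stdout.
        result = subprocess.run([java, "-version"], capture_output=True, text=True)
        banner = result.stderr.strip()
        print(banner)
        print("OK" if java_is_new_enough(banner) else "Java 1.6 or higher is required")
```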

To install Hadoop, first get the latest stable release at http://hadoop.apache.org/core/releases.html. After you unpack the distribution, edit the script conf/hadoop-env.sh to set JAVA_HOME to the root of the Java installation you noted earlier. For example, on Mac OS X, you’d replace this line

```bash
# export JAVA_HOME=/usr/lib/j2sdk1.5-sun
```

with this line

```bash
export JAVA_HOME=/Library/Java/Home
```
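If you prefer to script this edit rather than make it by hand, the following Python sketch rewrites the JAVA_HOME line. It assumes the unpacked release contains conf/hadoop-env.sh as described above. `set_java_home` and `update_hadoop_env` are hypothetical helper names, and the Mac OS X path is only the example value from the text.

```python
import re
from pathlib import Path

# Matches an "export JAVA_HOME=..." line, whether or not it is commented out.
JAVA_HOME_LINE = re.compile(r"^[ \t]*#?[ \t]*export[ \t]+JAVA_HOME=.*$", re.MULTILINE)


def set_java_home(script_text, java_home):
    """Return script_text with its first JAVA_HOME export set to java_home."""
    new_line = f"export JAVA_HOME={java_home}"
    if JAVA_HOME_LINE.search(script_text):
        # A function replacement keeps any backslashes in java_home literal.
        return JAVA_HOME_LINE.sub(lambda _match: new_line, script_text, count=1)
    # No such line yet: append one.
    body = script_text.rstrip("\n")
    return (body + "\n" if body else "") + new_line + "\n"


def update_hadoop_env(hadoop_dir, java_home):
    """Rewrite conf/hadoop-env.sh inside an unpacked Hadoop directory."""
    env_script = Path(hadoop_dir) / "conf" / "hadoop-env.sh"
    env_script.write_text(set_java_home(env_script.read_text(), java_home))
```

For example, update_hadoop_env("hadoop-x.y.z", "/Library/Java/Home"), where hadoop-x.y.z is a placeholder for the directory you unpacked.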

Installing Hadoop:

1. Go to “http://hadoop.apache.org/releases.html”.

2. Click “Download”.

3. Click “Download Release Now”.

4. Click the suggested mirror link.

5. Open the “stable” folder.

6. Select the release archive (the .tar.gz file) to download.
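Once the archive is downloaded, it has to be unpacked before the configuration step described earlier. On Linux, Mac OS X or Cygwin, tar xzf followed by the archive name does this. The Python sketch below does the same with the standard tarfile module. The function name `unpack_release` is hypothetical, and the archive name depends on the release you picked.

```python
import tarfile
from pathlib import Path


def unpack_release(archive_path, dest_dir):
    """Extract a downloaded .tar.gz release; return its top-level directories."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        top_level = sorted({Path(m.name).parts[0]
                            for m in tar.getmembers() if Path(m.name).parts})
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions: reject absolute paths, ".." and similar.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return [dest / name for name in top_level]
```

The returned directory is the one whose conf/hadoop-env.sh you edit to set JAVA_HOME.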

Cheers!!